The challenge

Vision Language Models (VLMs) have the potential to revolutionize robotics, but their usefulness is limited by the difficulty of generating accurate, reliable visual descriptions. Meanwhile, traditional assistive technologies often offer only limited support for mobility and spatial awareness.

The solution

Biped.ai uses depth information and images captured by the RealSense™ Depth Module D430 for essential tasks such as scene understanding, object recognition, and generative AI descriptions.

The results

Equipped with 3D vision and advanced AI algorithms, Biped.ai is poised to revolutionize the way blind and visually impaired individuals move through their worlds.

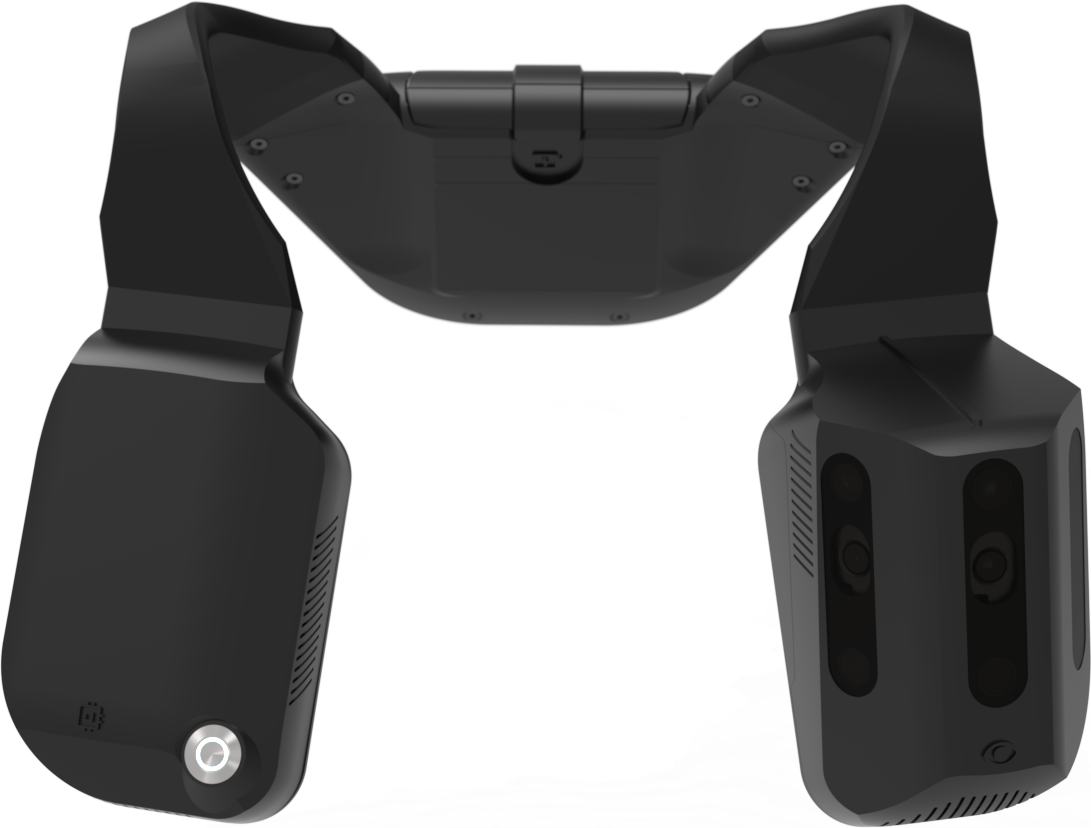

Introducing NOA: The AI-Powered Mobility Vest for the Visually Impaired

Experience a revolutionary approach to mobility for the visually impaired. NOA, developed by Biped.ai, is a cutting-edge mobility vest that empowers users with real-time guidance, obstacle detection, and environmental descriptions, enabling greater independence and confidence in their daily lives.


AI-Driven Spatial Awareness

NOA leverages self-driving vehicle software co-developed with Honda Research Institute. By fusing turn-by-turn GPS instructions with visual data, it offers precise, context-aware navigation, enabling users to traverse complex environments like city streets, crowded pathways, and public transport hubs.


Real-Time Obstacle Detection


Generative AI Descriptions

When users press a button on the vest, NOA activates its generative AI capability, describing nearby activities such as “crossing a street” or “entering a shop.” This enhanced awareness enables better decision-making in unfamiliar surroundings.

Seamless Integration and Scalability

The D430’s native support for the RealSense SDK 2.0 accelerates development with pre-built tools for image pre-processing, multi-camera sync, and IMU data processing. As a result, Biped.ai’s team fast-tracked the prototyping process, reducing engineering hours and shortening time-to-market.

D430 Module: The Vision Behind NOA

The RealSense Depth Module D430 serves as the core of NOA’s vision system, offering precise depth perception, a wide field of view, and high-resolution image capture. This lets users receive accurate, real-time obstacle alerts, even in complex and fast-changing surroundings. Designed to function in diverse conditions, the D430 handles RGB, infrared, and depth data and performs consistently even in low-light settings, a crucial factor for users with light sensitivity.
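To illustrate how a depth frame can be turned into an obstacle alert, here is a minimal sketch. It is not NOA’s actual algorithm. It assumes a frame of raw z16 depth values where 0 means "no measurement", a depth scale of 0.001 m per unit (the usual D400-series default; on a real device it would be read with `first_depth_sensor().get_depth_scale()`), and a walking corridor taken as the middle third of the image. The function names, the 1.5 m alert distance, and the minimum pixel count are hypothetical values chosen for illustration.

```python
from typing import List, Optional

# Hypothetical defaults for illustration only; not NOA's actual parameters.
DEPTH_SCALE_M = 0.001    # metres per depth unit (assumed D400-series default of 1 mm)
ALERT_DISTANCE_M = 1.5   # warn when something is closer than this
MIN_PIXELS = 3           # require several close pixels so isolated noise is ignored

DepthFrame = List[List[int]]  # rows of raw z16 depth values; 0 means "no data"


def central_region(depth: DepthFrame) -> List[int]:
    """Flatten the middle third of the frame (rows and columns) into one list."""
    h = len(depth)
    w = len(depth[0]) if h else 0
    r0, r1 = h // 3, h - h // 3
    c0, c1 = w // 3, w - w // 3
    return [v for row in depth[r0:r1] for v in row[c0:c1]]


def nearest_obstacle_m(depth: DepthFrame,
                       alert_distance_m: float = ALERT_DISTANCE_M,
                       min_pixels: int = MIN_PIXELS,
                       depth_scale: float = DEPTH_SCALE_M) -> Optional[float]:
    """Return the distance in metres to the nearest obstacle in the corridor,
    or None if fewer than min_pixels pixels fall inside the alert distance."""
    limit_units = alert_distance_m / depth_scale
    # Zero-valued pixels carry no depth measurement, so skip them.
    close = [v for v in central_region(depth) if 0 < v < limit_units]
    if len(close) < min_pixels:
        return None
    return min(close) * depth_scale


# Usage: a 6x6 synthetic frame, everything about 3 m away except a
# 1 m obstacle straight ahead.
frame = [[3000] * 6 for _ in range(6)]
for r in (2, 3):
    for c in (2, 3):
        frame[r][c] = 1000
print(nearest_obstacle_m(frame))  # prints 1.0
```

Counting several close pixels instead of reacting to a single one is a simple way to suppress spurious alerts from depth noise; a production system would likely also apply filtering and track obstacles across frames.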

“RealSense technology provides high-quality depth perception and visual information. This enables NOA to accurately detect objects, avoid obstacles, and create detailed 3D maps of the surrounding environment.”

— Mael Fabien,

Co-founder, Biped.ai